Using the Wrong Language Model

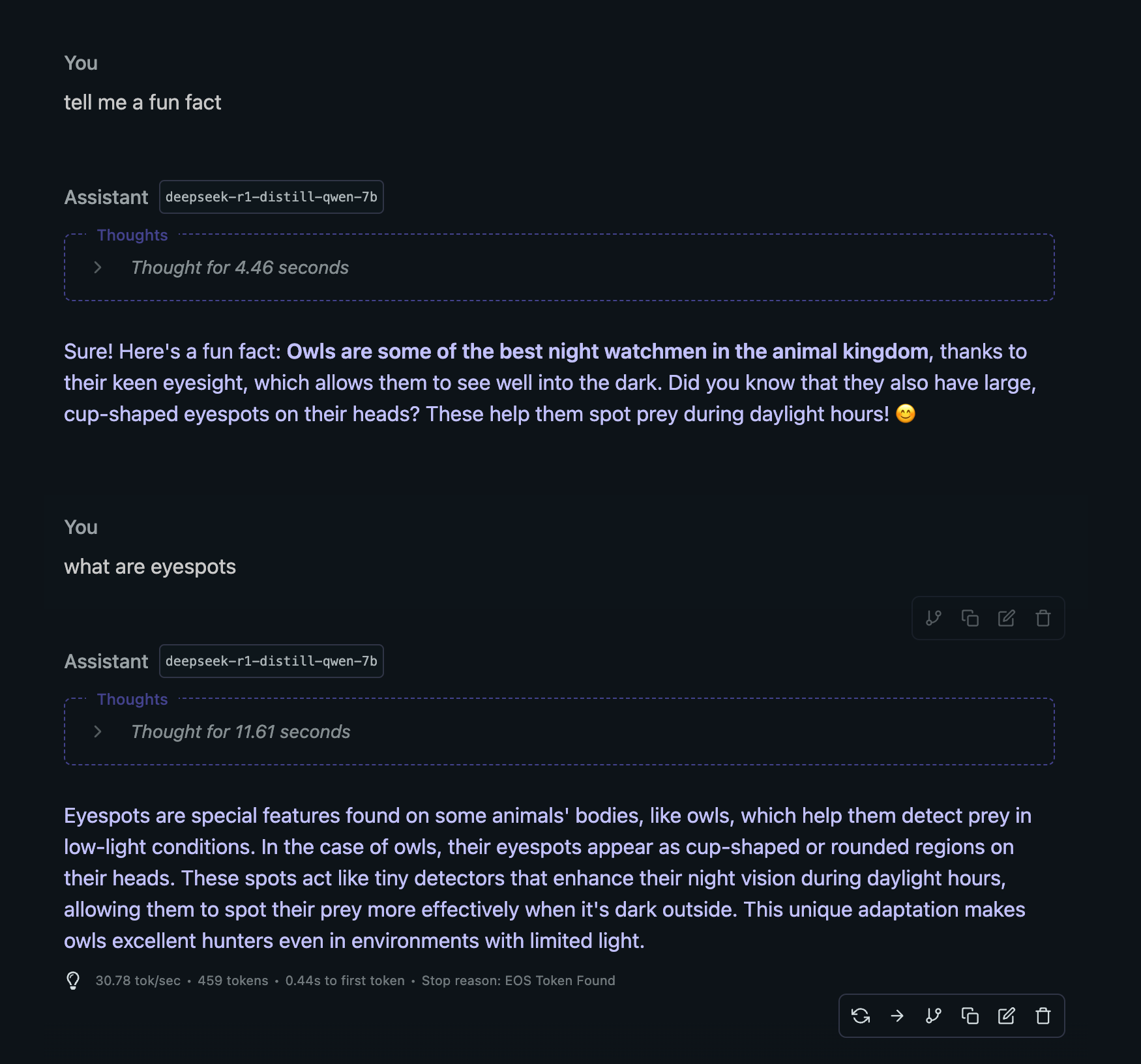

In the image above (transcript below), I asked an LLM to "tell me a fun fact." It responded with a somewhat correct fact that was a bit mundane, perhaps because I know more about owls than this language model does.

But this is a good example of why it helps to understand what different models are for.

First off, most LMs are not good at information seeking. The models that do best at helping you find information are trained on broad datasets and take longer to respond. The responses you do get will likely be directionally correct but light on specifics.

The model I used in this example is deepseek-r1-distill-qwen-7b, a 7B Qwen model distilled from DeepSeek's R1 reasoning model and tuned for reasoning and chain of thought. While this model struggles with information seeking, it performs reasonably well at linking generic ideas.

So, what happened in this chat?

I started by asking for information. The model found some, but it got lost in "eyespots." In mimicry, eyespots are markings that look like eyes and deter predators. Separately, some algae have photoreceptive organelles known as eyespots. On top of that, owls' eyes are quite different from human eyes.

The LM likely riffed on the idea of an "eye," the various meanings of "eyespot," and the fact that owls don't have eyes like ours. What it said about owl vision was correct, but what it said about eyespots was wrong.

Asking the Model to Think About Itself

I followed this chat up with the following prompt:

Based on the chat above, help me draft a blog post about why some LLMs are bad at fact-finding. I want to explain that the model used in this chat is specifically tuned for chain-of-thought

It replied with an entire blog post and, unsurprisingly, misrepresented how accurate its training data is. However, it did come up with these two self-reflections:

Chain-of-Thought Mechanism: These models are often tuned for chain-of-thought reasoning, which relies on internal knowledge rather than external validation mechanisms like access to databases or encyclopedias. While this facilitates logical chaining of information, it may result in a lack of independent fact-checking, potentially leading to incorrect conclusions.

Contextual Use: While LLMs are powerful tools, they should be used within appropriate contexts where the information provided is supported by external knowledge or validation processes.

I would take out a few of those "potentially"s and "may"s, but the idea is correct. LLMs are not great at fact-finding because they only know what they know. They aren't search engines and can't reflect on the quality of the information they were trained on.

Conclusion

Big, slow models like Claude are more eloquent and more likely to be accurate. They are good all-purpose models. Smaller, tuned models are better at the specific tasks they are designed for.

Transcript

Assistant:

Hello! How can I assist you today? 😊

You:

tell me a fun fact

Assistant:

Sure! Here's a fun fact: Owls are some of the best night watchmen in the animal kingdom, thanks to their keen eyesight, which allows them to see well into the dark. Did you know that they also have large, cup-shaped eyespots on their heads? These help them spot prey during daylight hours! 😊

You:

what are eyespots

Assistant:

Eyespots are special features found on some animals' bodies, like owls, which help them detect prey in low-light conditions. In the case of owls, their eyespots appear as cup-shaped or rounded regions on their heads. These spots act like tiny detectors that enhance their night vision during daylight hours, allowing them to spot their prey more effectively when it's dark outside. This unique adaptation makes owls excellent hunters even in environments with limited light.